Classifier

Interactive Installation | June 2023

Selected Exhibition:

2023, IMA LOW RES THESIS, NYU Shanghai, Shanghai, China

Description:

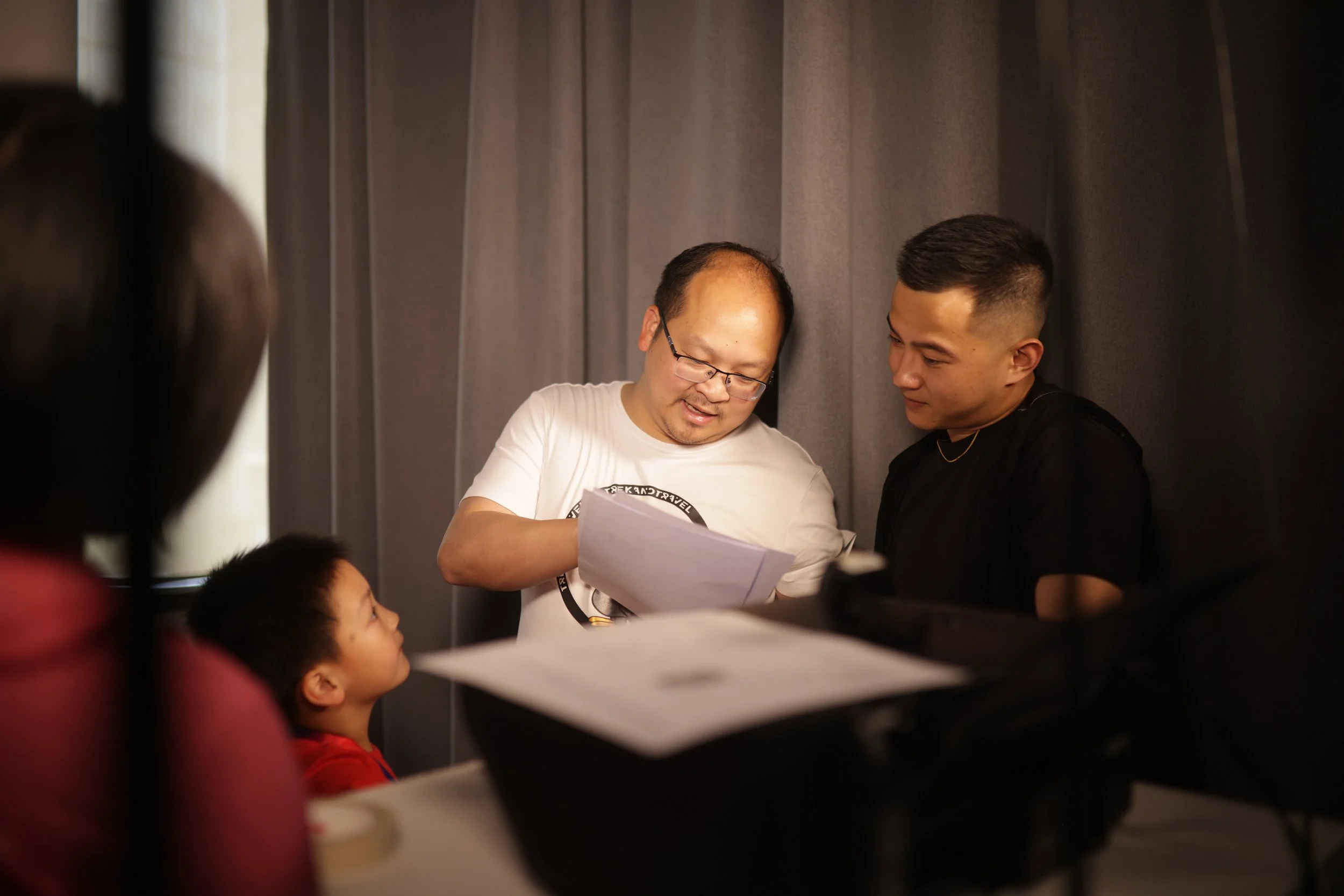

This project is a physical installation designed to provoke reflection on the complexity of emotions. Using a camera and a facial emotion recognition system, the installation records and analyzes each participant and generates a prediction report covering age, gender, race, emotion, mental health, and an overall score. Reports are printed on a continuous printer, and each participant's score is assigned by an algorithm developed for the project. Participants can take their report away or pin it to the wall for reference. The project aims to encourage a deeper understanding of emotions by drawing attention to their diverse expressions and to the risks of facial emotion recognition technology and biased artificial intelligence. It also asks whether facial emotion recognition should be used at all to predict inner feelings or to assess dependability, conscientiousness, emotional intelligence, and cognitive ability, and it imagines a future in which every facial expression is automatically analyzed.

Background:

Advances in affective computing have led many companies worldwide to develop facial emotion recognition systems intended to let artificial intelligence predict human behavior. Tech giants such as Amazon, Microsoft, and Google provide basic emotion analysis capabilities, while smaller enterprises like Affectiva and HireVue specialize in applications tailored to specific industries such as automotive, advertising, mental healthcare, and recruitment. MIT also has dedicated affective computing groups that develop and assess new ways of integrating Emotion AI and other affective technologies to enhance people's lives.

Despite numerous articles highlighting the dangers and biases of facial recognition systems, many companies continue to use them. This was particularly noticeable during the pandemic, when software such as 4 Little Trees generated detailed reports on students' emotional states for teachers and even assessed motivation and focus. However, it is important to recognize that "It is not possible to confidently infer happiness from a smile, anger from a scowl, or sadness from a frown."

In a review published by the Association for Psychological Science, Barrett, Adolphs, Marsella, Martinez, and Pollak (2019) spent two years examining more than 1,000 studies and found that people use a wide array of expressions to convey emotions. This variability makes it difficult to reliably infer someone's emotional state from a limited set of facial movements. The paper highlights three significant limitations in the scientific research, which contribute to a general misunderstanding of how emotions are expressed and perceived through facial movements and limit the applicability of the evidence: limited reliability, lack of specificity, and limited generalizability.

Technical Details:

The project takes a critical stance towards facial emotion recognition systems. The classifier, built with Python, TensorFlow, and other open-source models and APIs, simulates a comprehensive health assessment process that covers data measurement, evaluation, emotion analysis, and inference of psychological well-being. For each participant, the installation runs facial and emotion recognition, psychological state analysis, a comprehensive evaluation, and printing in sequence, producing a tangible paper report to take away. A scoring algorithm written specifically for this project assigns the scores and generates the holistic evaluations and recommendations.
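The scoring step is not documented in detail, so the following is only a minimal sketch of how per-emotion probabilities from a classifier could be reduced to a single score and a printable report. The weights, the function names (`dominant_emotion`, `overall_score`, `format_report`), and the report layout are all assumptions made for illustration, not the installation's actual algorithm.

```python
# Hypothetical weights: how much each predicted emotion raises or lowers the score.
# These values are invented for illustration and are not taken from the project.
EMOTION_WEIGHTS = {
    "happy": 1.0,
    "neutral": 0.5,
    "surprise": 0.25,
    "sad": -0.5,
    "fear": -0.75,
    "angry": -1.0,
}


def dominant_emotion(emotions):
    """Return the label with the highest predicted probability."""
    return max(emotions, key=emotions.get)


def overall_score(emotions):
    """Map the weighted sum of emotion probabilities from [-1, 1] onto 0-100."""
    weighted = sum(
        EMOTION_WEIGHTS.get(label, 0.0) * prob for label, prob in emotions.items()
    )
    weighted = max(-1.0, min(1.0, weighted))  # clamp in case inputs do not sum to 1
    return round((weighted + 1.0) * 50)


def format_report(emotions):
    """Build the plain-text report that would be sent to the printer."""
    lines = ["CLASSIFIER REPORT", "-" * 24]
    # List emotions from most to least probable.
    for label, prob in sorted(emotions.items(), key=lambda item: -item[1]):
        lines.append(f"{label:<10}{prob:>8.0%}")
    lines.append("-" * 24)
    lines.append(f"Dominant emotion: {dominant_emotion(emotions)}")
    lines.append(f"Overall score: {overall_score(emotions)}/100")
    return "\n".join(lines)


if __name__ == "__main__":
    # Example probabilities, as an emotion classifier might return them.
    sample = {"happy": 0.6, "neutral": 0.3, "sad": 0.1}
    print(format_report(sample))
```

A fixed weight table like this makes the arbitrariness of such a score easy to see: changing a single weight changes every participant's result.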

References:

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements. Psychological Science in the Public Interest, 20(1), 1–68. https://doi.org/10.1177/1529100619832930

Thomas Zerdick (2021). EDPS TechDispatch: Facial Emotion Recognition. Issue 1, 2021

On the Basis of Face (2020) by Devon Schiller.

Affective Computing: Challenges - Rosalind W. Picard - MIT Media Laboratory

Nathaniel Stern. Interactive Art and Embodiment: The Implicit Body as Performance

Caroline A. Jones. Sensorium: Embodied Experience, Technology, and Contemporary Art

Tina Gonsalves - Chameleon

Atlas of AI - Book by Kate Crawford

Ploin, A., Eynon, R., Hjorth, I., & Osborne, M. A. (2022). AI and the Arts: How Machine Learning is Changing Artistic Work. Report from the Creative Algorithmic Intelligence Research Project. Oxford Internet Institute, University of Oxford, UK.

Notes on Gesture - Martine Syms, 2015 (still).

Training Poses (Installation) - 2019 · Installation - Sam Lavigne

A face-scanning algorithm increasingly decides whether you deserve the job - Drew Harwell

Emotion recognition is mostly ineffective. Why are companies still investing in it? - Greg Noone

Emotion recognition: can AI detect human feelings from a face? - Madhumita Murgia

#sad - Cyrus Clarke - Artistic Installation